Best OpenRouter Alternatives for Production AI Systems

Introduction

The generative AI landscape has exploded into a multi-model ecosystem. Developers can no longer rely on a single Large Language Model (LLM) for every task; efficiency demands matching each query to the model that best balances cost, speed, and quality. This pursuit of optimization, however, creates a sprawl of fragmented APIs, inconsistent billing, and complex failure handling.

Platforms like OpenRouter emerged to solve this chaos, offering a unified API layer to manage hundreds of models. Yet, as enterprise AI scales from experimentation to mission-critical workloads, developers realize the need for solutions that offer deeper control, better governance, and tighter integration with their existing MLOps infrastructure.

This shift is driving demand for next-generation LLM Gateways and Routers that provide enterprise-grade capabilities beyond simple aggregation.

What is OpenRouter?

OpenRouter is an LLM aggregator that provides a single, OpenAI-compatible API for accessing a wide range of proprietary and open-source models. Instead of managing separate credentials and SDKs for each provider, developers interact with OpenRouter using one API key and a standardized request format.

Under the hood, OpenRouter connects to multiple inference providers and exposes them through a unified interface. Developers can switch between models by updating configuration rather than rewriting application logic.
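As a minimal sketch of what "one API key and a standardized request format" can look like, the snippet below builds (but does not send) an OpenAI-style chat completion request. The base URL is OpenRouter's OpenAI-compatible endpoint; the `build_chat_request` helper, the `LLM_MODEL` and `OPENROUTER_API_KEY` environment variable names, and the model identifiers are illustrative assumptions.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible endpoint; everything else here is illustrative.
BASE_URL = "https://openrouter.ai/api/v1"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching models is a configuration change, not a code change.
MODEL = os.environ.get("LLM_MODEL", "openai/gpt-4o")  # hypothetical config knob
API_KEY = os.environ.get("OPENROUTER_API_KEY", "sk-placeholder")  # assumed variable name
request = build_chat_request(MODEL, "Summarize this ticket.", API_KEY)
```

Passing `request` to `urllib.request.urlopen` would send it. Only the `model` string changes when moving between providers.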

In addition to aggregation, OpenRouter supports basic routing capabilities. Requests for a given model can be forwarded to different hosting providers based on availability, pricing, or latency. This reduces vendor lock-in and simplifies experimentation across models.

How does OpenRouter work?

OpenRouter operates as an intermediary layer between applications and model providers. It does not host models itself but orchestrates requests across external inference services.

At a high level, the request flow includes:

- Request normalization

Applications send requests using a standard OpenAI-compatible format. OpenRouter translates these requests into the provider-specific formats required by the underlying model hosts.

- Provider selection and routing

For a given model, OpenRouter selects an appropriate inference provider based on factors such as pricing, latency, or availability. If a provider becomes unavailable, requests can be rerouted automatically.

- Unified billing and settlement

Instead of managing multiple provider accounts and invoices, developers maintain a single balance with OpenRouter. Usage is aggregated across providers and billed centrally.

This abstraction allows teams to treat multiple models and providers as a single logical interface, reducing integration overhead during development.
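The three steps above can be sketched as a toy aggregator. This is not OpenRouter's implementation; the `Provider` and `Aggregator` classes, the native request shape, and the prices are all made up for illustration, and "cheapest available provider" is just one possible selection rule.

```python
from dataclasses import dataclass, field


@dataclass
class Provider:
    name: str
    price_per_1k_tokens: float  # USD, made-up numbers
    available: bool = True

    def to_native(self, request: dict) -> dict:
        # Step 1: translate the OpenAI-style request into a provider-specific shape.
        return {"provider": self.name, "model": request["model"], "input": request["messages"]}


@dataclass
class Aggregator:
    providers: dict  # model name -> list of Provider objects hosting that model
    balance_usd: float
    usage_log: list = field(default_factory=list)

    def route(self, request: dict, tokens_used: int) -> dict:
        # Step 2: pick the cheapest provider that is currently available,
        # which also reroutes automatically when a provider goes down.
        candidates = [p for p in self.providers[request["model"]] if p.available]
        if not candidates:
            raise RuntimeError("no provider available for " + request["model"])
        chosen = min(candidates, key=lambda p: p.price_per_1k_tokens)
        native = chosen.to_native(request)
        # Step 3: charge one shared balance, whichever provider served the call.
        cost = tokens_used / 1000 * chosen.price_per_1k_tokens
        self.balance_usd -= cost
        self.usage_log.append((chosen.name, tokens_used, cost))
        return native
```

Marking a provider as unavailable (`provider.available = False`) causes the next `route` call to fall through to the next-cheapest host, while the caller's request format and balance stay the same.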

Why Explore OpenRouter Alternatives?

While OpenRouter is effective for simplifying access to multiple models, it is fundamentally designed as a public aggregation layer. As organizations scale AI workloads into production, that design can become limiting. For enterprises where compliance, security, and deep debugging are non-negotiable, the constraints below often motivate a move toward a dedicated AI gateway.

Governance and compliance constraints

Using OpenRouter requires routing requests through a third-party proxy before they reach the model provider. For regulated industries, this additional hop can complicate compliance with frameworks such as GDPR, HIPAA, or internal data residency requirements. OpenRouter also offers limited pre-processing controls for enforcing organizational policies before data leaves the application environment.

Limited access control and identity integration

OpenRouter’s access model is optimized for developer convenience rather than enterprise identity management. It lacks fine-grained role-based access control (RBAC) and native integration with corporate identity providers. This makes it difficult to enforce model-level or team-level permissions at scale.
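To make "model-level permissions" concrete, here is a minimal sketch of the kind of check a gateway with RBAC might run before forwarding a request. The role names, model names, users, and the `is_allowed` helper are hypothetical; in a real deployment the user-to-role mapping would typically come from a corporate identity provider rather than a hard-coded dictionary.

```python
# Hypothetical policy: role -> set of model identifiers that role may call.
ROLE_MODELS = {
    "research": {"gpt-4o", "claude-3.5-sonnet"},
    "support": {"gpt-4o-mini"},
}

# Hypothetical directory: user -> roles (normally resolved through an identity provider).
USER_ROLES = {
    "alice": {"research"},
    "bob": {"support"},
}


def is_allowed(user: str, model: str) -> bool:
    """Return True if any of the user's roles grants access to the model."""
    roles = USER_ROLES.get(user, set())
    return any(model in ROLE_MODELS.get(role, set()) for role in roles)
```

Unknown users and unknown roles resolve to empty sets, so the check denies by default.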

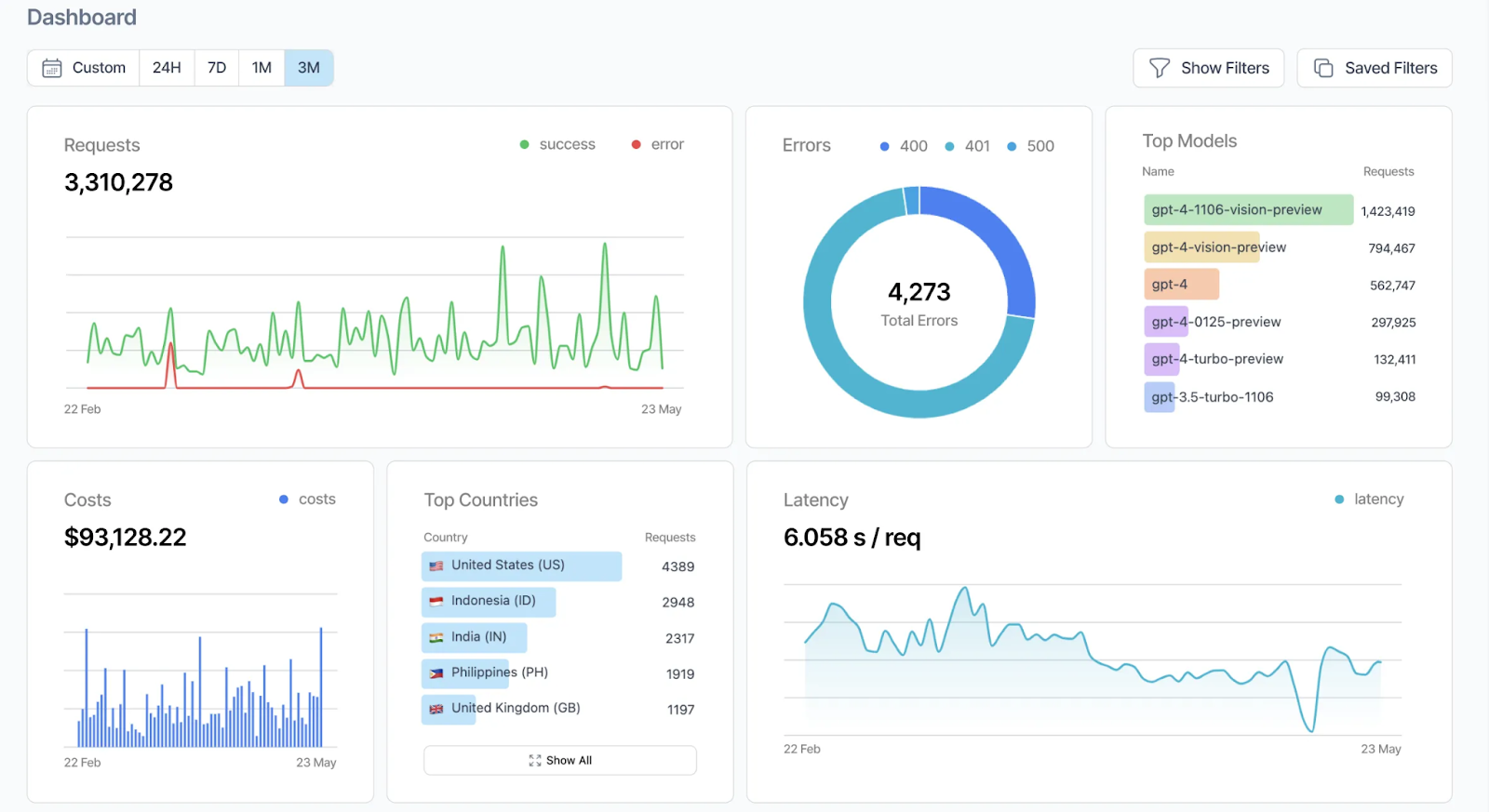

Gaps in observability and debugging

OpenRouter provides usage and billing visibility but offers limited execution-level observability. For production systems, teams often need traces that link prompts, routing decisions, latency, and model-specific failures. Without integrated tracing or easy export of telemetry into internal observability stacks, debugging complex workflows becomes operationally expensive.
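A rough sketch of what an execution-level trace could contain is shown below: one record that ties the prompt to the routing decision, the latency, and any failure, serialized as a JSON line that an internal observability stack could ingest. The `LLMTrace` fields and the `traced_call` wrapper are assumptions for illustration, not the schema of any particular product.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class LLMTrace:
    prompt: str
    model: str
    provider: str
    routing_reason: str
    latency_ms: float = 0.0
    error: Optional[str] = None
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def traced_call(call, prompt, model, provider, routing_reason):
    """Run call(prompt) and return (result, trace) with latency and any error recorded."""
    trace = LLMTrace(prompt, model, provider, routing_reason)
    start = time.perf_counter()
    result = None
    try:
        result = call(prompt)
    except Exception as exc:  # record the failure instead of losing it
        trace.error = f"{type(exc).__name__}: {exc}"
    trace.latency_ms = (time.perf_counter() - start) * 1000
    return result, trace


def to_json_line(trace: LLMTrace) -> str:
    """Serialize a trace as one JSON line for export to a log pipeline."""
    return json.dumps(asdict(trace))
```

Here `call` stands in for whatever function actually invokes the model; wrapping it this way keeps the routing reason and the model-specific error in the same record as the prompt.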

As a result, many teams adopt OpenRouter during early experimentation but later transition to dedicated LLM gateways that provide stronger governance, security, observability, and deployment flexibility.

Many engineering teams evaluating aggregation layers start with side-by-side comparisons such as LiteLLM vs OpenRouter. While both tools simplify access to multiple LLM providers, they differ significantly in architecture, deployment flexibility, and production readiness. LiteLLM functions primarily as an open-source proxy abstraction, whereas OpenRouter operates as a public aggregation service. For production AI systems, teams often need capabilities that go beyond both, such as private deployment, advanced governance, and deep observability.

Top 5 OpenRouter Alternatives

The transition from a simple API wrapper to a production-grade AI system requires more than just a model aggregator. It requires an infrastructure layer that provides security, reliability, and advanced orchestration. Here are the top 5 OpenRouter alternatives leading the market in 2025.

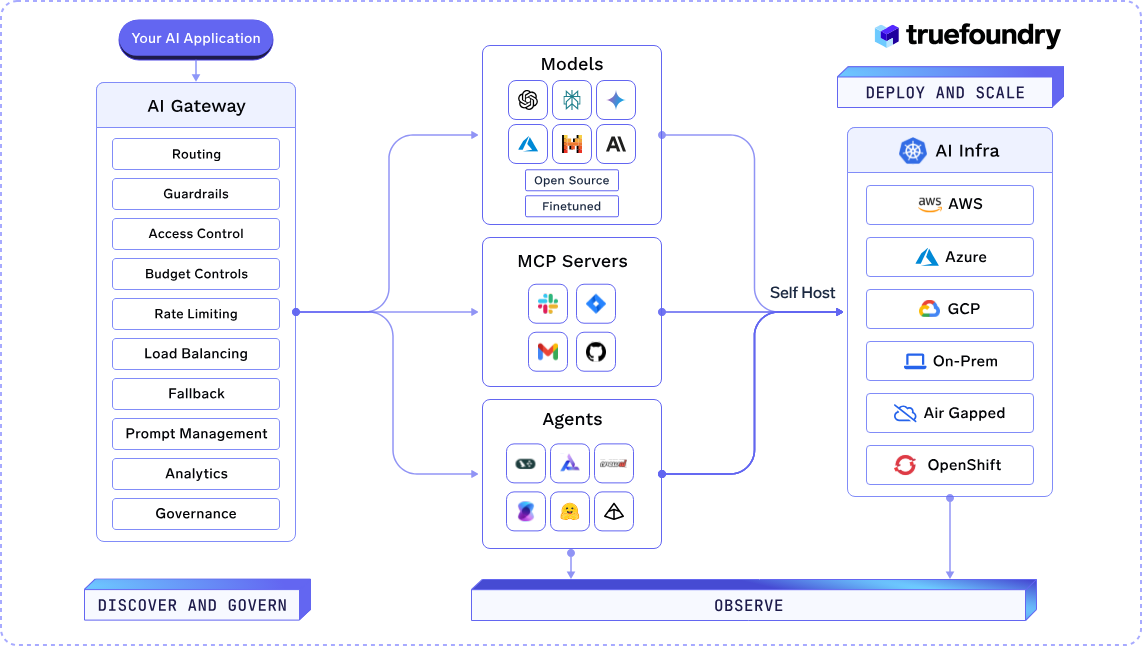

1. TrueFoundry

TrueFoundry is the leading enterprise-grade alternative to OpenRouter, specifically designed for organizations that have outgrown public aggregators and require a private, secure AI Gateway. While OpenRouter excels at providing a broad catalog of models via a public proxy, TrueFoundry allows you to deploy its gateway within your own VPC or on-premise hardware. This architectural shift ensures that your sensitive data never leaves your controlled environment, resolving the primary compliance and security hurdles faced by large-scale enterprises.

TrueFoundry's gateway is uniquely built for the era of Agentic AI. It natively supports the Model Context Protocol (MCP), allowing your agents to securely connect to internal tools and data sources with centralized governance. Its multi-model routing goes beyond simple price and latency; you can define sophisticated fallback chains, enforce team-level quotas, and use a unified AI Gateway Playground to test and version prompts across 250+ models. With integrated observability, TrueFoundry captures end-to-end traces of every interaction, making it a comprehensive control plane for the entire LLM lifecycle.

Best For:

Enterprises requiring strict data sovereignty, SOC 2 compliance, and advanced agent orchestration within their own private infrastructure.

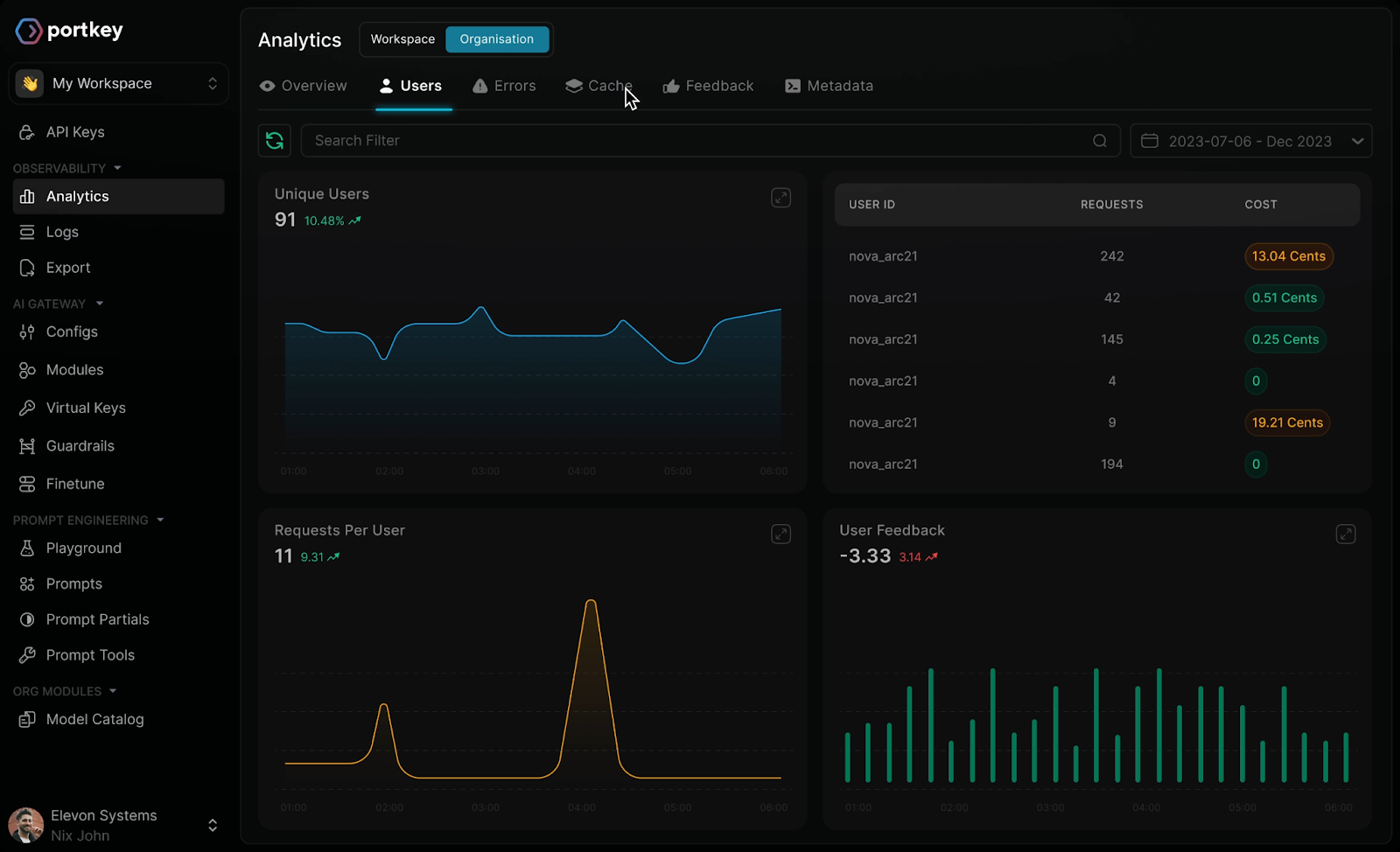

2. Portkey

Portkey is a specialized control plane designed to bring industrial-strength reliability to LLM applications. It is often the first choice for engineering teams that need to guarantee 99.9% uptime. The platform acts as a high-performance middleware that adds a layer of "intelligence" to your API calls. Its standout capability is the Config Object, which allows you to define complex routing logic such as automatic retries with exponential backoff and multi-model fallbacks, without touching your application code.
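The routing behavior described here, retries with exponential backoff followed by fallback to another model, can be sketched generically as follows. This is not Portkey's Config Object schema; `call_with_resilience`, its parameters, and the `(name, callable)` target format are hypothetical, and a gateway would apply the same logic outside the application rather than in it.

```python
import time


def call_with_resilience(targets, prompt, max_retries=3, base_delay=0.5, sleep=time.sleep):
    """Try each target in order, retrying failures with exponential backoff.

    `targets` is a list of (name, callable) pairs; the first entry is the
    primary model and later entries are fallbacks.
    """
    errors = []
    for name, call in targets:
        for attempt in range(max_retries):
            try:
                return name, call(prompt)
            except Exception as exc:
                errors.append((name, attempt, repr(exc)))
                if attempt < max_retries - 1:
                    # Delay doubles on each retry: base_delay, 2x, 4x, ...
                    sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"all targets failed: {errors}")
```

The `sleep` parameter is injectable so the backoff schedule can be tested without real waiting. Production versions usually also add jitter and retry only on transient errors such as timeouts or rate limits.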

Beyond routing, Portkey is a leader in LLM Observability. It provides a "single pane of glass" to view costs, latency, and error rates across all your providers. Its Virtual Keys feature is particularly valuable, allowing you to create and manage scoped API keys for different teams or environments, ensuring that one team's experiment doesn't accidentally drain your entire organization's budget. With built-in support for prompt versioning and a collaborative playground, it bridges the gap between development and production operations.
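The idea behind scoped keys can be illustrated with a small budget guard. This is a sketch of the concept only, not Portkey's Virtual Keys API; the `ScopedKey` class, the key names, and the dollar amounts are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class ScopedKey:
    team: str
    budget_usd: float
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> None:
        """Record spend, refusing any charge that would exceed this key's budget."""
        if self.spent_usd + cost_usd > self.budget_usd:
            raise PermissionError(f"budget exceeded for team {self.team!r}")
        self.spent_usd += cost_usd


# Each team or environment gets its own key with its own ceiling.
keys = {
    "vk-growth": ScopedKey(team="growth", budget_usd=50.0),
    "vk-search": ScopedKey(team="search", budget_usd=200.0),
}
```

Because each key tracks its own spend, an experiment that exhausts `vk-growth` is rejected without touching the budget behind `vk-search`.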

Best For:

SRE and DevOps teams focused on building resilient, high-availability AI systems with deep monitoring and automated error handling.

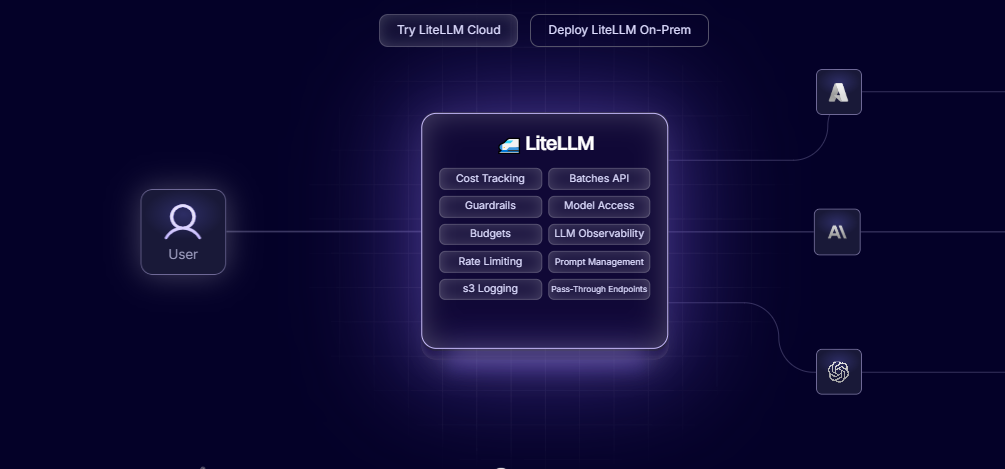

3. LiteLLM

If you prefer the flexibility of open-source software, LiteLLM is the definitive community favorite. It is a lightweight Python library and proxy server that allows you to call 100+ LLMs using the standardized OpenAI format. Unlike the hosted alternatives, LiteLLM is designed to be "pip-installed" or run as a container, giving you total ownership of your gateway logic. It effectively removes the "middleman" by letting you build and host your own private version of OpenRouter.

LiteLLM’s primary strength is its simplicity and neutrality. It handles the tedious work of translating different API parameters and error codes into a consistent format, making it trivial to swap models like Claude for Gemini. It also includes built-in support for budget tracking and load balancing across multiple instances of the same model. For teams building custom internal platforms or those who want to avoid any form of vendor lock-in, LiteLLM provides the necessary building blocks without the overhead of an enterprise SaaS platform.

Best For:

Developers and startups who want a customizable, open-source proxy to standardize their multi-model integrations.

4. Helicone

Helicone is the observability-first gateway that focuses on the "missing data" of the LLM lifecycle. It is widely recognized for its one-line integration; by simply changing your API base URL, you gain instant access to a suite of advanced analytics. While it offers robust routing and failover capabilities similar to OpenRouter, its true value lies in its ability to help you understand and optimize your AI spend.

One of Helicone's most impactful features is Semantic Caching. It intelligently identifies prompts that are semantically similar to previous ones and can serve the cached response instantly. This doesn't just reduce latency; it significantly slashes API costs for repetitive tasks like customer support or data summarization. Its dashboard provides granular insights into user-level costs and token usage, making it an essential tool for product managers who need to track unit economics. Helicone is also fully open-source, allowing for VPC deployments that satisfy security-conscious teams.
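A toy version of semantic caching is sketched below to show the mechanism: embed the prompt, find the most similar cached prompt, and reuse its response if the similarity clears a threshold. This is not Helicone's implementation. The bag-of-words `embed` function is a deliberately crude stand-in for a real embedding model, and the `SemanticCache` class and its 0.8 threshold are assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, prompt: str):
        """Return the cached response for the most similar prompt, or None on a miss."""
        query = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))
```

With this toy embedding, "How can I reset my password?" matches a cached "How do I reset my password?" because five of six words overlap, while an unrelated question misses and would go to the model. The threshold is the main tuning knob: too low and users receive answers to questions they did not ask.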

Best For:

Product-led teams that need granular cost attribution, semantic caching, and a developer-friendly debugging experience.

5. Kong AI Gateway

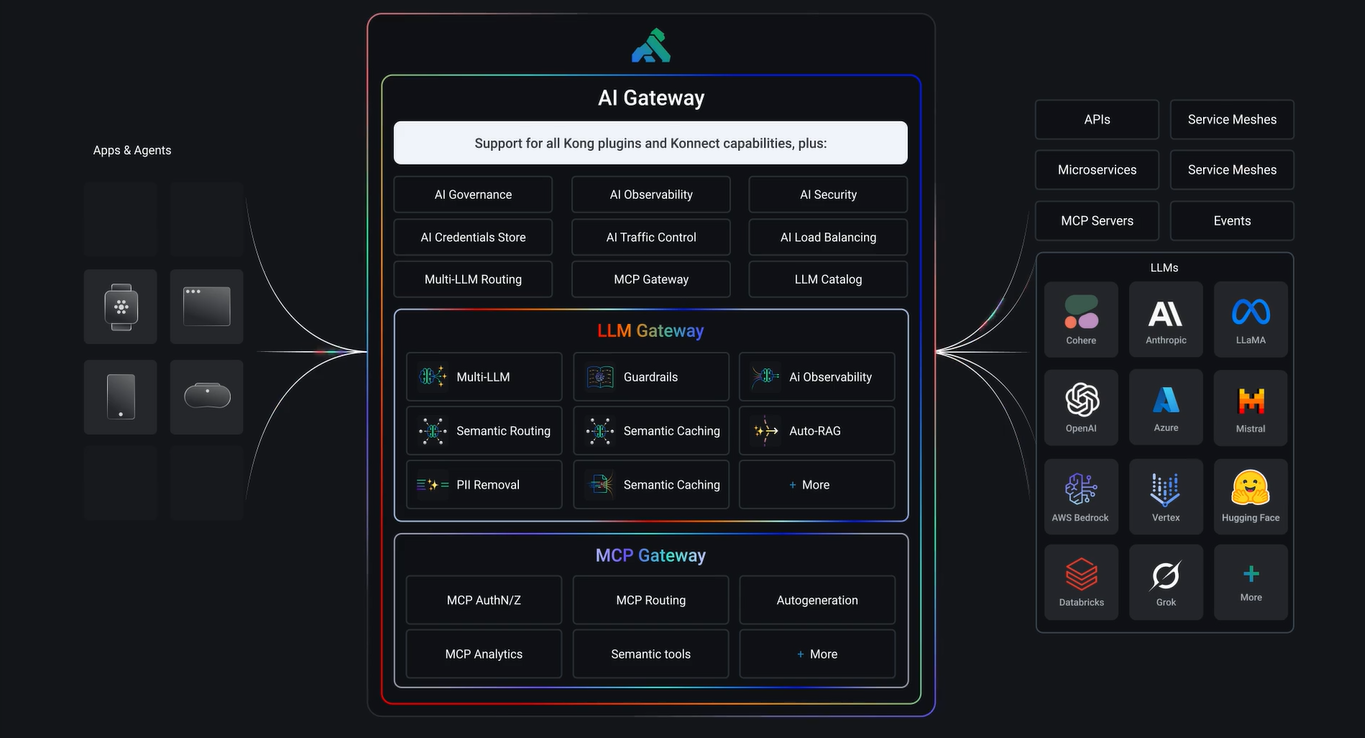

Kong is the industry standard for API management, and its AI Gateway extension is built for the complexity of the modern corporate IT stack. This is a solution for organizations that treat AI as a core component of their microservices architecture. Kong allows you to manage LLM traffic using the same battle-tested plugins used for traditional web traffic, including rate limiting, authentication, and logging.

The platform excels in centralized policy enforcement. It allows security teams to implement "AI Guardrails" globally, such as automatically detecting and redacting PII before a prompt is sent to an external provider. It also supports AI Semantic Routing, which can route a request to a cheaper or faster model based on the complexity or topic of the user's input. For enterprises already using Kong to manage their internal APIs, adding the AI Gateway is a seamless way to bring governance, security, and standardization to their generative AI initiatives.
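As an illustration of centralized policy enforcement, the sketch below redacts two kinds of PII before a prompt leaves the gateway and then picks a model tier. It is not Kong's plugin configuration. The regular expressions cover only email addresses and US Social Security number patterns, the model names are placeholders, and the routing rule uses prompt length as a crude complexity heuristic rather than true semantic routing, which would typically compare embeddings.

```python
import re

# Deliberately narrow patterns; real guardrails cover many more PII types.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(prompt: str) -> str:
    """Replace email addresses and SSN-shaped numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return US_SSN.sub("[SSN]", prompt)


def pick_model(prompt: str, word_limit: int = 50) -> str:
    # Length heuristic only: short prompts go to the cheaper tier.
    if len(prompt.split()) <= word_limit:
        return "small-fast-model"  # placeholder name
    return "large-capable-model"  # placeholder name


def prepare(prompt: str) -> dict:
    """Apply the guardrail first, then route the sanitized prompt."""
    clean = redact(prompt)
    return {"model": pick_model(clean), "prompt": clean}
```

Running the guardrail before routing means every downstream component, including logs and caches, only ever sees the redacted text.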

Best For:

Large-scale organizations and platform engineers who need to manage AI traffic alongside a complex ecosystem of microservices and internal APIs.


Conclusion

The shift from experimental AI to production-grade applications requires a transition from simple model aggregators to robust infrastructure. While OpenRouter provides an excellent entry point for model discovery, the needs of a scaling enterprise (security, data sovereignty, and granular governance) eventually demand a more controlled environment. Whether you choose a high-performance gateway like TrueFoundry for its private cloud security or an open-source proxy for total flexibility, the goal remains the same: building a resilient, governed, and cost-effective AI stack that can evolve with the rapidly changing model landscape.

Built for Speed: ~10ms Latency, Even Under Load


- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.
